Latest articles

Defence in depth: Securing Azure App Service with Azure Front Door WAF, NodeJS runtime Security enhancements tested with OWASP ZAP

I’ve recently been playing around with Azure Front Door and its WAF policies. Here are some notes I decided to…

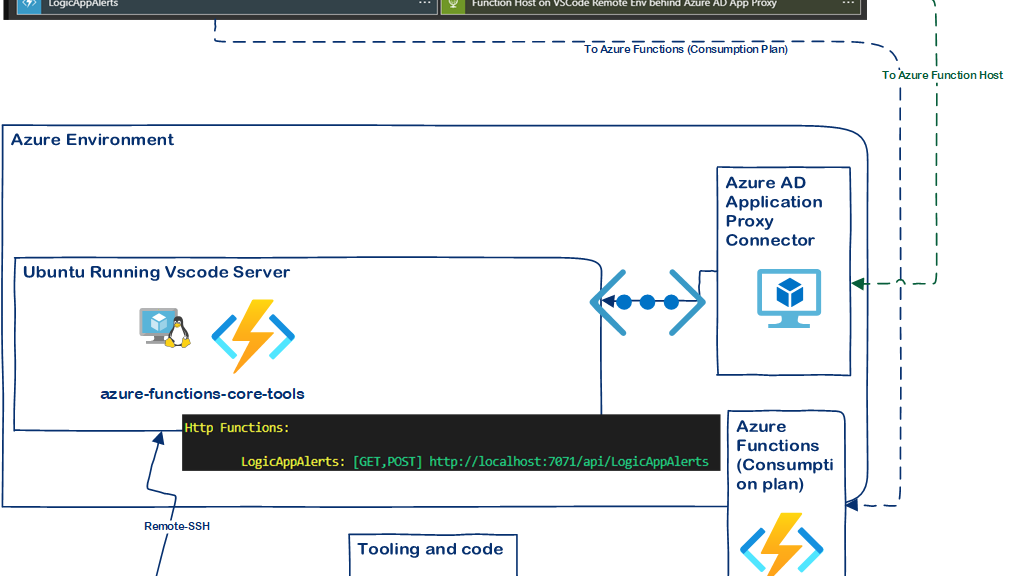

Developer experience on steroids with Azure AD App Proxy and Azure Functions Host

I’ve used Azure AD App Proxy a lot over the years, and I keep finding it useful in new…

Top 3 Picks from Azure Security Center Standard

I was recently discussing with another Azure aficionado the value proposition of Security Center Standard compared to staying…

Azure AD B2X is here! (Yes, B2X, not B2C or B2B): Debugging and insights

Now that we are past the clickbait title (B2X), let’s dig into Azure AD External Identities, which was unveiled at…

Deep Dive: Azure AD group/role claims for developers and IT pros, with code examples

Background: Many enterprise applications rely on group/role information being passed in assertions for authorization and further role decisions.

Lab: Zero Trust Exchange 2016 with AAD OAuth2 and SAML (KEMP)

Welcome to the lab post on implementing “Zero Trust”, or identity-perimeter-ish controls, for your hybrid environment: this part is…

Don’t try this at home (or how to enable Server Core remote management for the AD FS GUI)

I’ve been running AD FS on Server Core for some time now, mostly because I like the smaller footprint and centralized…

Azure Traffic Analysis with Kusto: Harnessing the capabilities of Kusto Query Language for in-depth Azure traffic analysis of IP addresses

I am preparing a talk for 2024 to showcase the capabilities of Kusto. As part of my preparation, I have…

WSL stuck at 100 percent CPU?

WSL stuck at 100% CPU when using VS Code? It’s brute force, but it just works: https://github.com/microsoft/WSL/issues/8529#issuecomment-1263463528 (thanks to the GitHub poster!) Some…

Quick way to test Azure AD Certificate Based Authentication

This is a “quick & dirty” way to enable basic testing of Azure AD CBA in test environments. Do not…

Microsoft Sentinel – Experimenting with Tactics and Techniques mapping from events to incidents via Analytics rules

I was recently working with community members to test the mapping of tactics and techniques to events in Azure Sentinel created…

Avoiding Consent to MS Graph PowerShell with Azure CLI: A Step Towards Simpler Operations and Adversary Simulation

When working with Microsoft Graph PowerShell, it’s often necessary to consent to specific scopes, which requires administrative approval. However, with…