Background

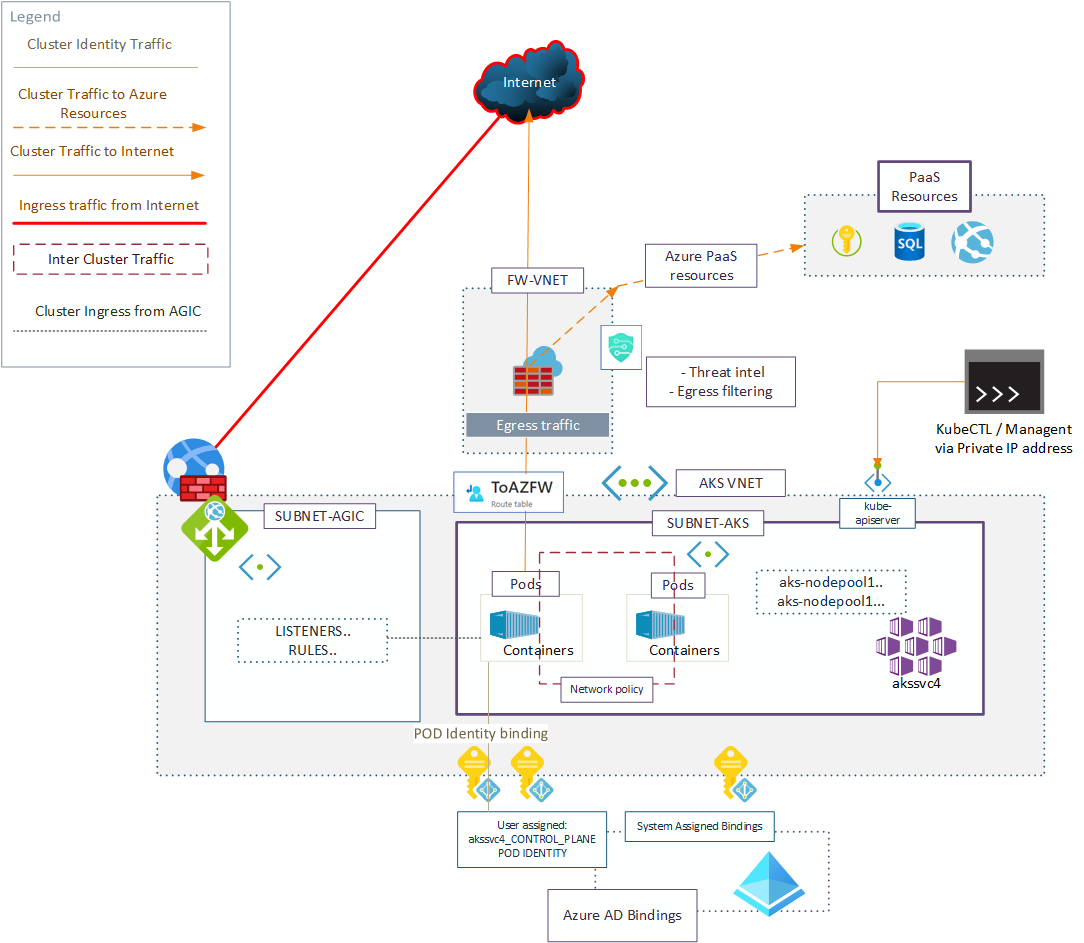

The architecture is similar to the one Microsoft defines in the AKS Security Baseline, slightly simplified: ingress connections bind directly to the pods' IP addresses via the AGIC configuration, without a separate ingress service.

The purpose of this document is to give a brief overview of the integration options with Azure Firewall and of the various levels of network and web security in AKS, mostly by referencing MS Docs and the simplified design pattern drawn here.

Solutions chosen for various areas concerning this post

Cheat Sheet

| Service | Web Application Firewall | Advanced Egress Filtering | Inter-Cluster Network Security | Threat Intel |
|---|---|---|---|---|
| Azure Application Gateway | ✅ | | | |
| Azure Firewall | | ✅ | | ✅ |
| Kubernetes Network Policies | | | ✅ | |

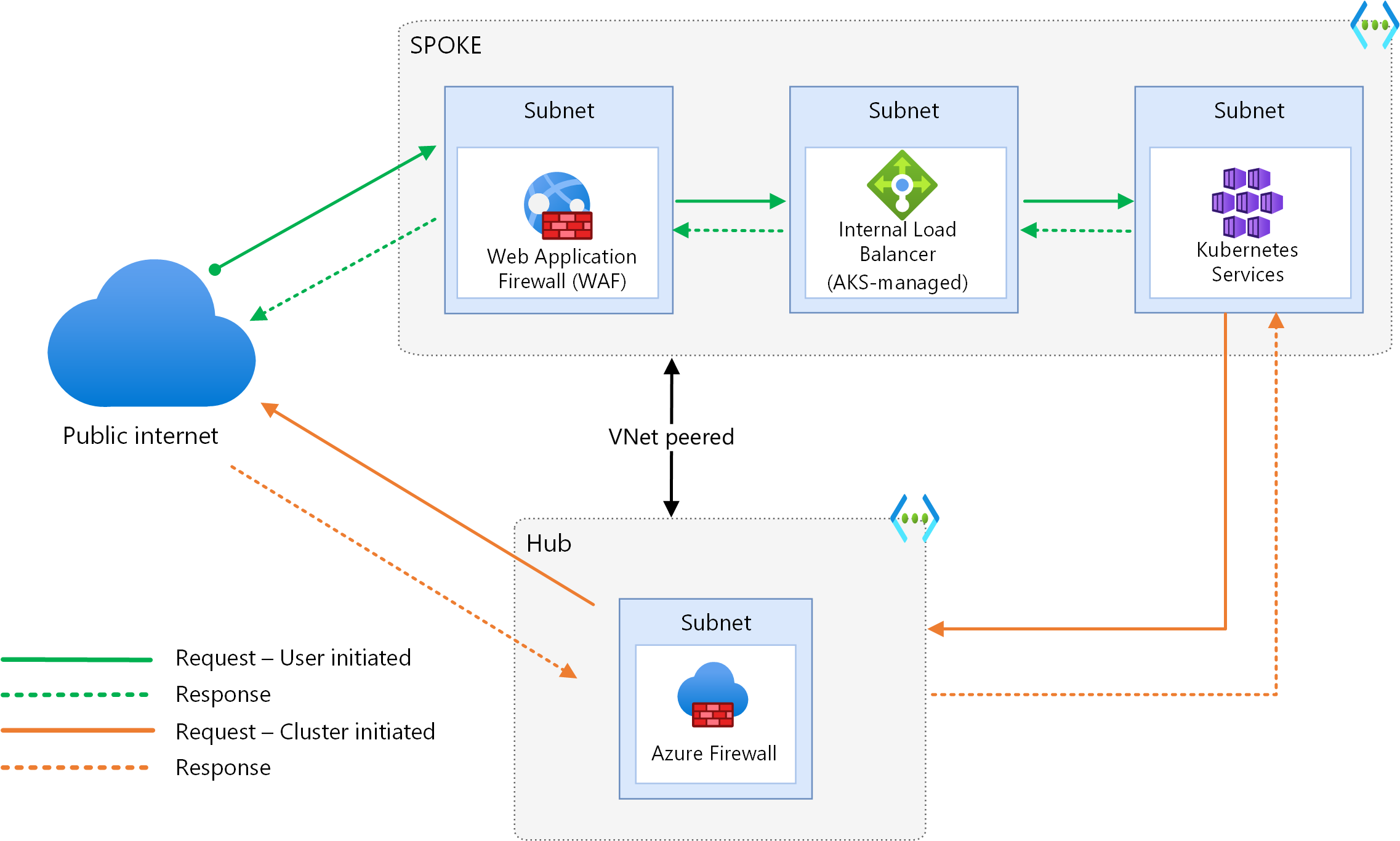

Firewall and Application Gateway in parallel

Design reference chosen concerning Azure Firewall and Application Gateway

Design reference for overall Architecture

- Private cluster model (this actually simplifies the integration with Azure Firewall if you do egress filtering there)

- The API server is only available via a private IP, but there is a public DNS record (this simplifies management quite a bit)

- Egress traffic is routed via Azure Firewall

- Ingress traffic from outside to web workloads in the cluster passes through AGIC with Azure Web Application Firewall enabled

- Workloads inside the cluster are protected by network policies

- Traffic to Azure PaaS services passes through Azure Firewall (you could also bypass the firewall in these scenarios, especially for VNET service endpoints, but routing via Azure Firewall has the benefit of centralized logging and connectivity)
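Routing egress through Azure Firewall means the firewall has to allow what the AKS nodes need. The following is a minimal sketch, not the configuration used for this post. It assumes the `azure-firewall` CLI extension is installed and uses a hypothetical firewall name (`azfw-hub`) and hypothetical collection and rule names. It relies on the `AzureKubernetesService` FQDN tag, and only prints the command unless `DRY_RUN=0` is set.

```shell
# Sketch only: FWNAME and the collection/rule names are hypothetical.
AZRG="rg-net-hub"           # hub resource group, as in the CLI reference of this post
FWNAME="azfw-hub"           # hypothetical firewall name
AKS_SUBNET="10.27.1.0/24"   # AKS subnet prefix, as in the CLI reference of this post

run() {
  # Print the command by default; execute it only when DRY_RUN=0.
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi
}

allow_aks_egress() {
  # Application rule that lets the AKS subnet reach the FQDNs
  # behind the AzureKubernetesService tag over HTTP and HTTPS.
  run az network firewall application-rule create \
    -g "$AZRG" -f "$FWNAME" \
    --collection-name aks-egress -n aks-fqdn-tag \
    --source-addresses "$AKS_SUBNET" \
    --protocols http=80 https=443 \
    --fqdn-tags AzureKubernetesService \
    --action allow --priority 100
}

allow_aks_egress
```

Workload-specific destinations (container registries, PaaS endpoints and so on) would need their own rules on top of this.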

AKS Rule 1 – Plan for change

Design your AKS architecture so that you can redeploy the cluster just as you would redeploy an application. The reasoning is that AKS is a fast-moving target: sooner or later an in-place upgrade of the cluster will not give you the security enhancement you are looking for. Network policies (1) are a good example: if you did not enable them when the cluster was created, you will not be enabling them later either, because network policy does not support in-place upgrade.

Plan the cluster as a supporting piece for the application, with its own separate, flexible lifecycle, rather than as a permanent service that generates technical debt.
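To find out whether an existing cluster falls into the "redeploy" category for network policies, you can inspect its network profile. This is a small sketch: `has_network_policy` is a hypothetical helper, and it treats an empty or `none`-like value as "no policy engine configured".

```shell
# Returns success (0) when the given networkPolicy value names a policy engine,
# and failure (1) when it is empty or a "none"-like value.
has_network_policy() {
  case "$1" in
    ""|none|None|null) return 1 ;;
    *) return 0 ;;
  esac
}

# Example wiring (needs a logged-in az CLI; NAME and NAMER as in the CLI reference of this post):
#   POLICY=$(az aks show -n "$NAME" -g "$NAMER" --query networkProfile.networkPolicy -o tsv)
#   has_network_policy "$POLICY" || echo "No network policy on this cluster: plan a redeploy"
```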

Briefly about network policies

Since you can't use NSGs to block traffic inside the AKS subnet, you must use network policies to limit any traffic you want locked down inside the cluster.

"Blocking internal subnet traffic using network security groups (NSGs) and firewalls is not supported. To control and block the traffic within the cluster, use Network Policies." https://docs.microsoft.com/en-us/azure/aks/limit-egress-traffic#background

Use network policies to segregate and secure intra-service communications by controlling which components can communicate with each other. By default, all pods in a Kubernetes cluster can send and receive traffic without limitations. To improve security, you can use Azure Network Policies or Calico Network Policies to define rules that control the traffic flow between different microservices. For more information, see Network Policy.
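As an illustration of what such rules look like, the sketch below writes two standard Kubernetes `NetworkPolicy` objects to a file: a default deny for ingress, and one rule that lets frontend pods reach backend pods. The namespace `demo`, the labels `app=frontend` / `app=backend` and port 8080 are hypothetical and not taken from the cluster in this post.

```shell
# Write a default-deny ingress policy plus one allow rule to a file.
# Namespace, labels and port are hypothetical examples.
POLICY_FILE="${TMPDIR:-/tmp}/backend-netpol.yaml"

cat > "$POLICY_FILE" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: demo
spec:
  podSelector: {}        # selects every pod in the namespace
  policyTypes:
    - Ingress            # no ingress rules listed, so all ingress is denied
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-backend
  namespace: demo
spec:
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: frontend
      ports:
        - protocol: TCP
          port: 8080
EOF

echo "Wrote $POLICY_FILE"
# Apply with: kubectl apply -f "$POLICY_FILE"
```

Because the Application Gateway in this design connects straight to pod IPs, a default-deny policy would presumably also need a rule that admits traffic from the gateway's subnet (for example via `ipBlock`); test this before relying on it.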

Routing 0.0.0.0/0

At first glance, the 0.0.0.0/0 user-defined route with Azure Firewall as the next hop might seem obvious: all egress traffic should flow there. Or should it? MS has a great article on this, but to summarize:

- Egress Internet traffic goes to Azure Firewall (AZFW)

- Traffic to PaaS services with VNET service endpoints enabled on the AKS subnet bypasses the firewall

- More here about what might not follow the 0.0.0.0/0 rule:

- If you enable the VNET service endpoint on the Azure Firewall subnet and remove it from the AKS subnet, you get a centralized access point, via Azure Firewall, to the PaaS services that support service endpoints

- Responses to traffic arriving from AGIC flow back to AGIC, and so on

- Cluster-internal, pod-to-pod and intra-VNET subnet traffic stays within the VNET

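The service endpoint move described above could look roughly like this. It is a sketch under assumptions: it uses the hub resource group and firewall VNET names from the CLI reference in this post, the fixed subnet name `AzureFirewallSubnet` that Azure Firewall requires, and `Microsoft.KeyVault` as an example service. The `run` helper is hypothetical and only prints the command unless `DRY_RUN=0` is set.

```shell
AZRG="rg-net-hub"                 # hub resource group, as in the CLI reference of this post
FWNET="vnet-azfw"                 # firewall VNET, as in the CLI reference of this post
FW_SUBNET="AzureFirewallSubnet"   # subnet name that Azure Firewall requires

run() {
  # Print the command by default; execute it only when DRY_RUN=0.
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi
}

enable_fw_service_endpoint() {
  # $1: service endpoint name, e.g. Microsoft.KeyVault
  run az network vnet subnet update \
    -g "$AZRG" --vnet-name "$FWNET" -n "$FW_SUBNET" \
    --service-endpoints "$1"
}

enable_fw_service_endpoint Microsoft.KeyVault
```

The PaaS resource's own firewall then has to allow the Azure Firewall subnet, and Azure Firewall needs a rule for the traffic. Check how `--service-endpoints` treats endpoints already configured on the subnet before running this against a shared hub.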

Configuration


AZ CLI reference for the cluster

Notes from testing

```shell
# Base variables, including the AAD admin group, resource names etc.
NAME=akssvc4
NAMER=RG-aks-refInstall
AADG=b8ae1f70-af1f-4b68-bb49-2f99f40758c1
LOCATION=westeurope
NET="AKS-VNET_SR"
TAGS="svc=aksdev"
ACR=acraksa
FWPrivateIp=172.19.0.4
RT=podRoute
FWNET="vnet-azfw"
AZRG="rg-net-hub"

# Resource group for the cluster resources
az group create -n $NAMER \
  -l $LOCATION \
  --tags $TAGS

# Route table with a default route that points at the Azure Firewall private IP
az network route-table create --name ${RT}_PODS \
  --resource-group $NAMER \
  --tags $TAGS

az network route-table route create -g $NAMER --route-table-name ${RT}_PODS -n ${RT}_PODS_RULE \
  --next-hop-type VirtualAppliance --address-prefix 0.0.0.0/0 --next-hop-ip-address $FWPrivateIp

# VNET with the AKS node subnet
az network vnet create --name ${NET} \
  --resource-group ${NAMER} \
  --address-prefix 10.27.0.0/16 \
  --subnet-name ${NET}_AKS \
  --subnet-prefix 10.27.1.0/24 \
  --tags $TAGS

# Associate the route table with the AKS subnet so node and pod egress goes via the firewall
az network vnet subnet update -g ${NAMER} --vnet-name ${NET} -n ${NET}_AKS \
  --route-table ${RT}_PODS

VNET_ID=$(az network vnet show --resource-group ${NAMER} --name ${NET} --query id -o tsv)
VNET_ID2=$(az network vnet show --resource-group ${AZRG} --name ${FWNET} --query id -o tsv)

# User-assigned identity for the cluster control plane
ASSIGNEDID=$(az identity create --name ${NAME}_CONTROL_PLANE -g ${NAMER} --tags $TAGS --query id -o tsv)

# Create the peering between the AKS VNET and the firewall VNET, in both directions
az network vnet peering create -g $NAMER -n ${NET}_AKS_PEER --vnet-name ${NET} \
  --remote-vnet $VNET_ID2 --allow-vnet-access --allow-forwarded-traffic

az network vnet peering create -g $AZRG -n ${FWNET}_AKS_PEER --vnet-name ${FWNET} \
  --remote-vnet $VNET_ID --allow-vnet-access --allow-forwarded-traffic

# Create the dedicated subnet for the Application Gateway used by AGIC
az network vnet subnet create -g ${NAMER} --vnet-name ${NET} -n ${NET}_AGIC \
  --address-prefixes 10.27.2.0/24

SUBNET_ID1=$(az network vnet subnet show --resource-group ${NAMER} --vnet-name ${NET} --name ${NET}_AKS --query id -o tsv)
SUBNET_ID2=$(az network vnet subnet show --resource-group ${NAMER} --vnet-name ${NET} --name ${NET}_AGIC --query id -o tsv)

# Private cluster: nodes in the AKS subnet, Application Gateway in the AGIC subnet
az aks create \
  -n ${NAME} -g ${NAMER} \
  --enable-private-cluster \
  --enable-public-fqdn \
  --enable-pod-identity \
  --enable-aad \
  --aad-admin-group-object-ids $AADG \
  -l $LOCATION \
  --disable-local-accounts \
  --enable-azure-rbac \
  --network-plugin azure \
  --private-dns-zone none \
  --network-policy azure \
  -a ingress-appgw --appgw-name AKS-AGIC \
  --appgw-subnet-id ${SUBNET_ID2} \
  --tags $TAGS \
  --vnet-subnet-id ${SUBNET_ID1} \
  --node-resource-group ${NAMER}_AZ_RES \
  --attach-acr $ACR \
  --assign-identity $ASSIGNEDID
```

The Azure Key Vault logs for a pod identity accessing Azure Key Vault via the service endpoint now show the internal IP address of the Azure Firewall subnet as the clientIP.

Hello, thanks for this article. I have a question: in an AKS cluster environment with an Nginx ingress controller, is it possible for incoming traffic to pass through an Application Gateway placed in front of an Azure Firewall? I haven't been able to find any similar architecture. Thanks for your help. Regards

HENNEBERT Mickael


Sorry for the super late reply! I think that if the AGW is in front, you might lose some IP-based rules in Azure Firewall. I am also not sure the AGW would work as part of the ingress configuration in AKS; it would rather be something that points to Azure Firewall. There have been a lot of updates on the AKS side, so more recent information might be available.
